What is Google's new AI tool Project Genie?

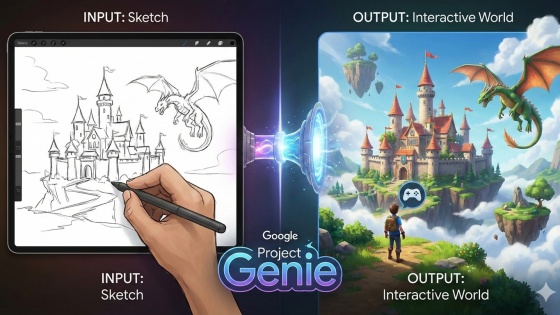

Imagine a world where a sketch on a piece of paper, or a simple photo of the forest behind your house, instantly becomes an interactive video game that you can actually play. This is not a 1990s science-fiction fantasy but a reality being built by Google DeepMind, Google's AI research lab. Its latest creation, Project Genie (Generative Interactive Environment), heralds the end of an era when building digital worlds was reserved for programmers and designers with years of experience.

For now, Project Genie is available only to Google AI Ultra subscribers in the US, a plan that costs just under $250 per month.

What is Project Genie anyway?

Genie is billed as the world's first "generative interactive world model." Over the past two years we have grown used to chatbots like ChatGPT that generate text and tools like Midjourney that create images, but Genie goes a step further: instead of static content, it creates living, responsive spaces.

Genie is an artificial intelligence model whose original research version was trained on more than 200,000 hours of 2D platform-game videos from the internet. What sets it apart from other models is that it was trained without any labels indicating which buttons were pressed or how the characters moved. It worked out on its own which parts of the image were “playable,” what happens when the character moves left, and how gravity affects objects in the virtual world.

This means that Genie is not just a video generator; you could call it a game engine powered by artificial intelligence. Given an image, it does not merely predict the next frames of a video but infers the logic of the scene and lets you step into it and take control.

Project Genie is built on Genie 3, which Google DeepMind first unveiled as a research preview in August last year. Now, five months later, by opening it up through Project Genie, the company is gathering feedback from a wider range of users while accelerating work on even more capable world models. Competition in the field is growing: Fei-Fei Li's World Labs released its first commercial product, Marble, late last year; the startup Runway recently launched its own world model; and Yann LeCun, Meta's former chief AI scientist, is pursuing similar models with his startup AMI Labs.

How does Genie work?

Researchers at DeepMind used what they call a “Latent Action Model.” Most video generation models simply guess what the next frame will be based on the previous ones. Genie goes deeper.

When the model watches gameplay footage, it effectively asks itself, “What invisible action caused this change on the screen?” Over time, it learned to recognize recurring movement patterns. Although it never saw a controller or any game code, it picked up concepts such as jumping, walking, and interacting. Now, when a user presses a key, Genie generates, in real time, the next frame of the world that corresponds to that action.
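To make the idea of a “latent action” more concrete, here is a deliberately tiny Python sketch. It is not DeepMind's code: the toy encoder (which only measures how far a bright pixel moved), the three-entry codebook, and all function names are hypothetical. What it illustrates is the core step described in the published Genie research: squeeze the change between two consecutive frames into one of a small set of discrete action codes by picking the nearest entry in a codebook (vector quantization). In the real model, the encoder and the codebook are learned from unlabeled video, trained so that the chosen code plus the earlier frames are enough to predict the next frame.

```python
import math
from typing import List, Sequence


def encode_transition(prev_frame: Sequence[float], next_frame: Sequence[float]) -> List[float]:
    """Toy stand-in for a learned encoder (hypothetical).

    Each frame is a 1-D strip of pixel brightness values containing one bright
    "character" pixel. The encoder reports how far that pixel moved, which is
    the only kind of change this toy world can contain.
    """
    prev_pos = max(range(len(prev_frame)), key=prev_frame.__getitem__)
    next_pos = max(range(len(next_frame)), key=next_frame.__getitem__)
    return [float(next_pos - prev_pos)]


def quantize(vector: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Vector quantization: index of the nearest codebook entry (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda i: math.dist(vector, codebook[i]))


def infer_latent_action(prev_frame, next_frame, codebook) -> int:
    """Answer "what invisible action caused this change?" with a discrete code."""
    return quantize(encode_transition(prev_frame, next_frame), codebook)


# Hypothetical codebook. In a trained model these vectors are learned and carry
# no labels; the names below are added only to make the example readable.
CODEBOOK = [[0.0], [1.0], [-1.0]]  # roughly: stay, move right, move left

before = [0.0, 1.0, 0.0, 0.0]
after = [0.0, 0.0, 1.0, 0.0]
print(infer_latent_action(before, after, CODEBOOK))  # → 1 (moved right)
```

Because the codes come without labels, it is only after training that one discovers what each code tends to do; a key press can then be tied to a code the model invented on its own.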

Its ability to build a world from a single image is striking. You can show it a real-world photo, a painting, or even a child's drawing, and Genie will turn that visual information into a game environment with consistent physics. If the drawing contains a chasm, the model understands that the character should not walk on air; if there is an obstacle, the character collides with it.

Unfortunately, we do not have access to Project Genie ourselves, but based on publicly available information, this is how the tool is used.

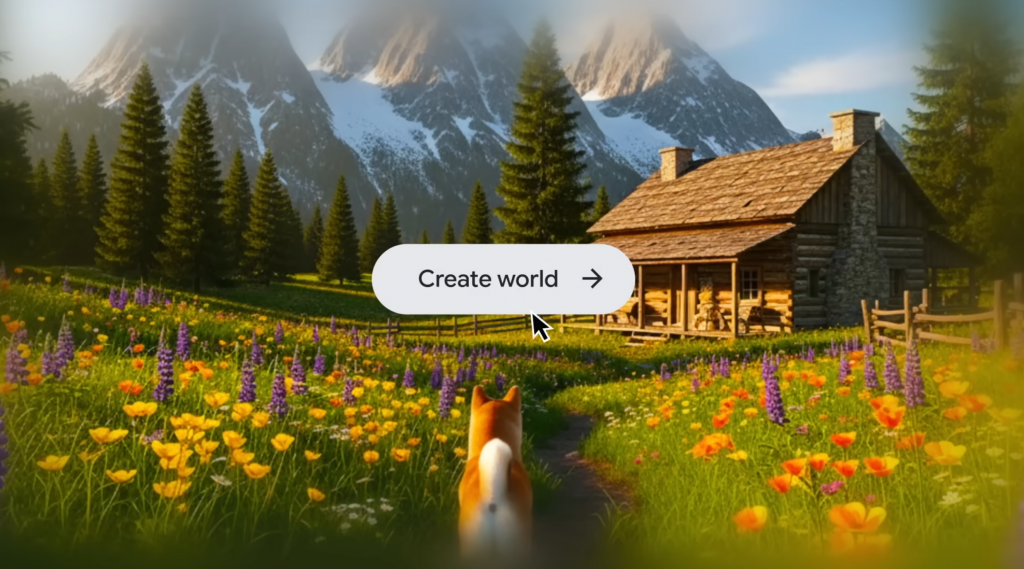

First, the user types a text description of the desired environment and character (or uploads a starting image) into the corresponding fields of the web app, for example, “a dense tropical rainforest with ancient ruins, the hero is an explorer walking on a suspension bridge.” Project Genie then uses Google's Nano Banana Pro image model to generate a preview image of the world from that description. The user can refine this image further (adding or removing elements, or adjusting details to better match the world they have in mind) and choose the camera perspective: first-person or third-person.

Once happy with the draft, the user clicks Create, and within moments Project Genie generates the interactive scene and places the user inside it. The environment appears on screen with basic controls that work as in typical computer games: W, A, S, and D to move, the spacebar to jump or ascend, and the arrow keys to rotate the view. Crucially, the world is not drawn in advance but built on the fly: as the character moves, the AI generates new stretches of landscape, events, and objects ahead of the player.
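Building the world on the fly amounts to an action-conditioned, autoregressive loop: each new frame is predicted from the frames generated so far plus the player's latest input. The Python sketch below shows only that control flow. The key-to-action table, `play`, `toy_model`, and the action names are hypothetical placeholders, since Google has not published how Project Genie represents actions internally, and a real system would run a large neural network where `toy_model` sits.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical mapping from the controls described above to action names.
# How Project Genie encodes actions internally is not public.
KEY_TO_ACTION: Dict[str, str] = {
    "W": "forward", "A": "left", "S": "back", "D": "right",
    "SPACE": "jump",
    "LEFT": "turn_left", "RIGHT": "turn_right",
    "UP": "look_up", "DOWN": "look_down",
}

Frame = str  # toy stand-in: a real frame would be an image


def play(first_frame: Frame,
         keys: Iterable[str],
         predict_next: Callable[[List[Frame], str], Frame],
         max_frames: int) -> List[Frame]:
    """Action-conditioned autoregressive generation.

    Nothing is pre-rendered: each new frame is produced from the frames
    generated so far plus the player's latest action.
    """
    history = [first_frame]
    for key in keys:
        if len(history) >= max_frames:
            break  # session budget used up
        action = KEY_TO_ACTION.get(key, "noop")  # unmapped keys do nothing
        history.append(predict_next(history, action))
    return history


def toy_model(history: List[Frame], action: str) -> Frame:
    """Hypothetical stand-in for the learned world model."""
    return f"frame{len(history)}:{action}"


frames = play("frame0:start", ["W", "W", "SPACE", "Q"], toy_model, max_frames=1440)
print(frames)
# ['frame0:start', 'frame1:forward', 'frame2:forward', 'frame3:jump', 'frame4:noop']
```

Setting `max_frames` to 1,440 mirrors a 60-second session at 24 frames per second; an unmapped key (here `Q`) simply produces a no-op step.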

The world is currently rendered in 720p resolution at 24 frames per second.

Each generated world can be explored for at most 60 seconds. After one minute the session ends, an understandable limit given how computationally intensive real-time generation is. The user can then save a video of their exploration, rerun the experiment, or start a completely new world from a new description.
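A quick back-of-the-envelope calculation shows how demanding these numbers are. Assuming 720p means the standard 1280 × 720 frame (the exact output size is our assumption), the model has roughly 42 ms to produce each frame, generates 1,440 frames in a one-minute session, and outputs about 22 million pixels every second:

$$
\begin{aligned}
t_{\text{frame}} &= \frac{1000\ \text{ms}}{24} \approx 41.7\ \text{ms} \\
N_{\text{session}} &= 60\ \text{s} \times 24\ \text{fps} = 1440\ \text{frames} \\
P_{\text{frame}} &= 1280 \times 720 = 921{,}600\ \text{pixels} \\
P_{\text{second}} &= 921{,}600 \times 24 = 22{,}118{,}400 \approx 22.1\ \text{million pixels/s}
\end{aligned}
$$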

In addition to creating worlds from scratch, users can try out pre-made worlds or ones created by others, and Project Genie lets them remix these worlds: the gallery offers a selection of sample worlds that can be used as a starting point and modified to your liking.

Watching the AI create worlds from scratch is impressive, but we have already mentioned some of the limitations. Beyond the low resolution and the time limit, users report that interaction between the character and its surroundings is very basic, and the controls respond more sluggishly than we are used to in today's games. In short, it is a fascinating tool, but it clearly shows that it is still in development.

Google stresses that Project Genie is being developed in line with its responsible AI principles. Misuse is prohibited, and built-in safety filters are designed to prevent users from generating harmful, illegal, or inappropriate content.

Why is this important for the future of artificial intelligence?

You might be wondering if we really need another AI tool, especially for creating video games. The answer is that Genie is not just a tool for games. Some experts believe that these world models are very important if we want to achieve artificial general intelligence (AGI).

For AI to truly understand the human world, it can’t just learn from text. It needs to understand cause and effect. If AI knows that a glass will fall to the floor and break if pushed off a table, that’s a form of intelligence that goes beyond just putting words together into sentences. Models like Genie teach AI physics, spatial orientation, and the logic of action in the real world.

This has huge implications for robotics. Instead of teaching robots to walk in expensive and potentially dangerous real-world settings, researchers could train them in endless AI-generated simulations that closely mimic reality, both visually and physically. A robot that learns to navigate thousands of different Genie worlds would be much better prepared to enter your kitchen.

Coming our way soon?

For now, Google is rolling out the new tool cautiously. Only US subscribers to the top-tier Ultra plan have access, while everyone else can only admire the footage and wait for wider availability. The company says it plans to gradually open it to more users and regions as the technology matures.